Archive for the ‘HTTP’ Category

Wednesday, December 11th, 2013

I just learned this little trick in Apache’s .htaccess file to strip the trailing .html from my URLs:

RewriteEngine on

RewriteCond %{REQUEST_FILENAME} !-d

RewriteCond %{REQUEST_FILENAME}\.html -f

RewriteRule ^(.*)$ $1.html

After adding the above lines to the .htaccess file, when I request http://nareshjain.com/about, Apache automatically serves the http://nareshjain.com/about.html page. It also handles named anchors very well. For example, http://nareshjain.com/services/clients#testimonials works perfectly fine as well.
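To make the logic of the rule concrete, here is a minimal JavaScript sketch of the decision those rewrite lines make. The function name resolveRequest and the files and dirs sets are hypothetical stand-ins for Apache and the document root; this is not how mod_rewrite is implemented. Named anchors keep working because the browser never sends the #fragment part of a URL to the server.

```javascript
// Sketch of the decision made by the rewrite rules.
// `files` and `dirs` are made-up stand-ins for the document root.
function resolveRequest(path, files, dirs) {
  const isDirectory = dirs.has(path);            // RewriteCond %{REQUEST_FILENAME} !-d
  const htmlExists = files.has(path + '.html');  // RewriteCond %{REQUEST_FILENAME}\.html -f
  if (!isDirectory && htmlExists) {
    return path + '.html';                       // RewriteRule ^(.*)$ $1.html
  }
  return path; // conditions not met: the request is left untouched
}

const files = new Set(['/about.html', '/services/clients.html']);
const dirs = new Set(['/services']);

console.log(resolveRequest('/about', files, dirs));            // rewritten to the .html file
console.log(resolveRequest('/services/clients', files, dirs)); // rewritten to the .html file
console.log(resolveRequest('/services', files, dirs));         // a directory, left as is
```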

Rationale behind this:

- My URLs are shorter

- Gives me the flexibility to use some web-framework to serve my pages in future.

Posted in Deployment, Hosting, HTTP | No Comments »

Monday, November 25th, 2013

Recently we started getting the following error on the Agile India Registration site:

error number: 35

error message: Unknown SSL protocol error in connection to our_payment_gateway:443

This error occurs when we try to connect to our payment gateway using cURL on the server side (PHP).

Looking at the error message, it occurred to me that maybe we were not using an SSL protocol version supported by our payment gateway (PG) server.

Using SSL Labs’ analyser, I figured out that our PG server only supports SSL version 3 and TLS version 1.

Typically, if we don’t specify the SSL version, cURL negotiates a version that both sides support. However, to force cURL to use SSL version 3, I added the following:

curl_setopt($ch, CURLOPT_SSLVERSION, 3);

As expected, it did not make any difference.

The next thing that occurred to me was that maybe the server was picking up a wrong SSL certificate and that might be causing the problem. So I got the SSL certificates from my payment gateway and started passing the path to the certificates:

curl_setopt($ch, CURLOPT_CAPATH, PATH_TO_CERT_DIR);

Suddenly, it started working; however, not always. Only about 50% of the time.

Maybe there was some timeout issue, so I added another cURL option:

curl_setopt($ch, CURLOPT_TIMEOUT, 0); //Wait forever

And now it always worked. However, I noticed that it was very slow. Something was not right.

Then I started using the curl command line to test things. When I issued the following command:

curl -v https://my.pg.server

* About to connect() to my.pg.server port 443 (#0)

* Trying 2001:e48:44:4::d0... connected

* Connected to my.pg.server (2001:e48:44:4::d0) port 443 (#0)

* successfully set certificate verify locations:

* CAfile: /etc/pki/tls/certs/ca-bundle.crt

CApath: none

* SSLv3, TLS handshake, Client hello (1):

* Unknown SSL protocol error in connection to my.pg.server:443

* Closing connection #0

curl: (35) Unknown SSL protocol error in connection to my.pg.server:443

I noticed that it was connecting to an IPv6 address. I was not sure if our PG server supported IPv6.

Looking at cURL’s man page, I saw an option to resolve the domain name to an IPv4 address only. When I tried:

curl -v -4 https://my.pg.server

It worked!

* About to connect() to my.pg.server port 443 (#0)

* Trying 221.134.101.175... connected

* Connected to my.pg.server (221.134.101.175) port 443 (#0)

* successfully set certificate verify locations:

* CAfile: /etc/pki/tls/certs/ca-bundle.crt

CApath: none

* SSLv3, TLS handshake, Client hello (1):

* SSLv3, TLS handshake, Server hello (2):

* SSLv3, TLS handshake, CERT (11):

* SSLv3, TLS handshake, Server finished (14):

* SSLv3, TLS handshake, Client key exchange (16):

* SSLv3, TLS change cipher, Client hello (1):

* SSLv3, TLS handshake, Finished (20):

* SSLv3, TLS change cipher, Client hello (1):

* SSLv3, TLS handshake, Finished (20):

* SSL connection using RC4-MD5

* Server certificate:

* subject: C=IN; ST=Tamilnadu; L=Chennai; O=Name Private Limited;

OU=Name Private Limited; OU=Terms of use at www.verisign.com/rpa (c)05; CN=my.pg.server

* start date: 2013-08-14 00:00:00 GMT

* expire date: 2015-10-13 23:59:59 GMT

* subjectAltName: my.pg.server matched

* issuer: C=US; O=VeriSign, Inc.; OU=VeriSign Trust Network;

OU=Terms of use at https://www.verisign.com/rpa (c)10;

CN=VeriSign Class 3 International Server CA - G3

* SSL certificate verify ok.

> GET / HTTP/1.1

...

Long story short, it turns out that passing the -4 or --ipv4 option to curl forces IPv4 usage, and this solved the problem.

So I removed everything else and just added the following option and things are back to normal:

curl_setopt($ch, CURLOPT_IPRESOLVE, CURL_IPRESOLVE_V4);
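For comparison, the same idea can be sketched in JavaScript on Node.js, where the family option of https.request restricts host name resolution to one address family. The helper names ipv4Options and fetchOverIPv4 are made up for this illustration, and my.pg.server is only the placeholder host used above; treat this as a sketch under those assumptions rather than a drop-in fix.

```javascript
const https = require('https');

// Build request options that pin host name resolution to IPv4,
// similar in spirit to CURLOPT_IPRESOLVE with CURL_IPRESOLVE_V4.
function ipv4Options(urlString) {
  const url = new URL(urlString);
  return {
    hostname: url.hostname,
    port: url.port || 443,
    path: url.pathname + url.search,
    method: 'GET',
    family: 4, // only resolve the host name to IPv4 addresses
  };
}

// Hypothetical usage: report the HTTP status (or the error) via a callback.
function fetchOverIPv4(urlString, onDone) {
  const req = https.request(ipv4Options(urlString), (res) => {
    res.resume(); // discard the body, only the status matters here
    onDone(null, res.statusCode);
  });
  req.on('error', onDone);
  req.end();
}
```

Calling fetchOverIPv4('https://my.pg.server/', callback) would then behave roughly like curl -4 against the same host.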

Posted in Deployment, Hacking, Hosting, HTTP, Networking | 7 Comments »

Wednesday, October 9th, 2013

It’s pretty common in webapps to use crontab to check for certain thresholds at regular intervals and send notifications if a threshold is crossed. Typically I would expose a secret URL and use Wget to invoke that URL via a cron job.

The following cron will invoke the secret_url every hour.

0 */1 * * * /usr/bin/wget "http://nareshjain.com/cron/secret_url"

Since this is running as a cron job, we don’t want any output. So we can add the -q and --spider command line parameters, like:

0 */1 * * * /usr/bin/wget -q --spider "http://nareshjain.com/cron/secret_url"

The --spider command line parameter is very handy; it is used for a dry run, i.e. to check whether the URL actually exists. This way you don’t need to do things like:

wget -q "http://nareshjain.com/cron/secret_url" -O /dev/null

But when you run this command from your terminal:

wget -q --spider "http://nareshjain.com/cron/secret_url"

Spider mode enabled. Check if remote file exists.

--2013-10-09 09:05:25-- http://nareshjain.com/cron/secret_url

Resolving nareshjain.com... 223.228.28.190

Connecting to nareshjain.com|223.228.28.190|:80... connected.

HTTP request sent, awaiting response... 404 Not Found

Remote file does not exist -- broken link!!!

You use the same URL in your browser and sure enough, it actually works. Why is it not working via Wget then?

The catch is, --spider sends a HEAD HTTP request instead of a GET request.

You can check your access log:

my.ip.add.ress - - [09/Oct/2013:02:46:35 +0000] "HEAD /cron/secret_url HTTP/1.0" 404 0 "-" "Wget/1.11.4"

If your secret URL points to an actual file (like secret.php), this does not matter; you should not see any error. However, if you are using a framework to specify your routes, you need to make sure you have a handler for HEAD requests in addition to GET.
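As a rough illustration of what such a handler could look like, here is a framework-free JavaScript sketch. The routes table and the dispatch function are hypothetical, not taken from any particular framework; the point is only that HEAD is routed like GET and answered with the same status but an empty body.

```javascript
// Hypothetical route table; '/cron/secret_url' is the URL used in this post.
const routes = {
  '/cron/secret_url': () => 'thresholds checked',
};

// Route a request and return the status and body to send back.
function dispatch(method, path) {
  const handler = routes[path];
  if (!handler) {
    return { status: 404, body: '' };
  }
  if (method !== 'GET' && method !== 'HEAD') {
    return { status: 405, body: '' };
  }
  // The handler runs for HEAD too, so the cron work is still performed.
  const body = handler();
  // A HEAD response has the same status as GET but carries no body.
  return { status: 200, body: method === 'HEAD' ? '' : body };
}

console.log(dispatch('HEAD', '/cron/secret_url'));
console.log(dispatch('GET', '/cron/secret_url'));
```

Note the design choice: in this sketch the handler runs for HEAD requests as well, which is what a wget --spider cron job relies on.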

Posted in Hosting, HTTP, Tips, Tools | No Comments »

Wednesday, July 17th, 2013

On my latest pet project, SlideShare Presentation Stack, I wanted to make an AJAX call to SlideShare.net to fetch all the details of a given SlideShare user. One easy way to get this information is to request the user’s RSS feed. However, the RSS feed is only served as XML, and due to the same-origin policy, I can’t make an AJAX call from my JavaScript to retrieve the XML. I wish SlideShare supported JSONP for fetching the user’s RSS feed, but they don’t. They do expose an API with rate limiting, but I wanted something simple. I found the following hack to work around this issue:

<script type="text/javascript">

simpleAJAXLib = {

init: function () {

this.fetchJSON('http://www.slideshare.net/rss/user/nashjain');

},

fetchJSON: function (url) {

var root = 'https://query.yahooapis.com/v1/public/yql?q=';

var yql = 'select * from xml where url="' + url + '"';

var proxy_url = root + encodeURIComponent(yql) + '&format=json&diagnostics=false&callback=simpleAJAXLib.display';

document.getElementsByTagName('body')[0].appendChild(this.jsTag(proxy_url));

},

jsTag: function (url) {

var script = document.createElement('script');

script.setAttribute('type', 'text/javascript');

script.setAttribute('src', url);

return script;

},

display: function (results) {

// do the necessary stuff

document.getElementById('demo').innerHTML = "Result = " + (results.error ? "Internal Server Error!" : JSON.stringify(results.query.results));

}

}

</script>

Trigger the AJAX call asynchronously by adding the following snippet wherever required:

<script type="text/javascript">

simpleAJAXLib.init();

</script>

How does this actually work?

- First, I would recommend you read my previous blog which explains how to make JSONP AJAX calls without using any javascript framework or library.

- Once you understand that, the most important piece is how we’ve used Yahoo! Query Language (YQL) as a proxy that fetches the XML and serves it back to us via JSONP.

- Use the following steps:

- Write a SQL-like statement such as select * from xml where url="your_xml_returning_url" and URI encode it.

- Append your YQL statement to the YQL URL: https://query.yahooapis.com/v1/public/yql?q=

- Finally, specify the format as JSON (ex: format=json) and a callback function name, which will be invoked by the browser once the results are retrieved (ex: callback=simpleAJAXLib.display)

- In the callback function, you can access the XML data from this JSON object: query.results
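The URL-building steps can be isolated into a small, testable function. This is only a sketch: buildYqlUrl is a name made up here, the endpoint is the one quoted in this post (it may no longer be available), and the query parameters mirror the ones used in fetchJSON.

```javascript
// Build the YQL proxy URL for an XML document, following the steps listed above.
function buildYqlUrl(xmlUrl, callbackName) {
  const root = 'https://query.yahooapis.com/v1/public/yql?q=';
  // Step 1: the SQL-like statement, which is URI encoded when appended.
  const yql = 'select * from xml where url="' + xmlUrl + '"';
  // Steps 2 and 3: append the statement, then ask for JSON and name the callback.
  return root + encodeURIComponent(yql) +
    '&format=json&diagnostics=false&callback=' + encodeURIComponent(callbackName);
}

console.log(buildYqlUrl('http://www.slideshare.net/rss/user/nashjain', 'simpleAJAXLib.display'));
```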

Posted in Hacking, HTTP, JavaScript | 9 Comments »

Wednesday, July 17th, 2013

On my latest pet project, SlideShare Presentation Stack, I wanted to make an AJAX call to fetch data from another domain. However, due to the same-origin policy, I can’t make a simple AJAX call; I need to use JSONP. jQuery and a bunch of other libraries provide a very convenient way of making JSONP calls. However, to minimize the dependencies of my JavaScript library, I did not want to use any JavaScript framework or library. I also wanted the code to work in all browsers that support JavaScript.

One option was to use XMLHttpRequest. But I wanted to explore whether there was any other option, and that is when I discovered this totally interesting way of making a JSONP AJAX call. Code below:

<script type="text/javascript">

simpleAJAXLib = {

init: function () {

this.fetchViaJSONP('your_url_goes_here');

},

fetchViaJSONP: function (url) {

url += '?format=jsonp&jsonp_callback=simpleAJAXLib.handleResponse';

document.getElementsByTagName('body')[0].appendChild(this.jsTag(url));

},

jsTag: function (url) {

var script = document.createElement('script');

script.setAttribute('type', 'text/javascript');

script.setAttribute('src', url);

return script;

},

handleResponse: function (results) {

// do the necessary stuff; for example

document.getElementById('demo').innerHTML = "Result = " + (results.error ? "Internal Server Error!" : results.response);

}

};

</script>

Trigger the AJAX call asynchronously by adding the following snippet wherever required:

<script type="text/javascript">

simpleAJAXLib.init();

</script>

How does this actually work?

It’s simple:

- Construct the AJAX URL (any URL that supports JSONP) and then append the callback function name to it. (For ex: http://myajaxcall.com?callback=simpleAJAXLib.handleResponse.) Also don’t forget to mention JSONP as your format.

- Create a new script tag and set this URL as its src

- Append this new script tag to the body. This will cause the browser to request the URL

- On the server side: In addition to returning the data in the expected format, wrap the data as a parameter to the actual callback function and return it with a JavaScript content type. The browser executes the returned JavaScript, and hence your callback function is invoked. See the server side code example below:

$data = array("response"=>"your_data");

header('Content-Type: text/javascript');

echo $_GET['jsonp_callback'] ."(".json_encode($data).")";

P.S.: Via this exercise, I actually understood how JSONP works.

If you want to call an API, which does not support JSONP, you might be interested in reading my other blog post on Fetching Cross Domain XML in JavaScript, which uses Yahoo Query Language (YQL) as a proxy to work around the same-origin-policy for xml documents.
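One thing worth considering on the server side is that the callback name comes straight from the query string and ends up inside executable JavaScript. The sketch below shows the same wrapping step in JavaScript with a simple check on the callback name. The function name jsonpBody and the exact pattern are assumptions made for this illustration, not part of any library.

```javascript
// Allow only dotted identifiers such as simpleAJAXLib.handleResponse.
const CALLBACK_NAME = /^[A-Za-z_$][\w$]*(\.[A-Za-z_$][\w$]*)*$/;

// Wrap the data as a parameter to the callback, as the server side does.
function jsonpBody(callbackName, data) {
  if (!CALLBACK_NAME.test(callbackName)) {
    // Refuse anything that is not a plain function reference.
    throw new Error('invalid JSONP callback name');
  }
  return callbackName + '(' + JSON.stringify(data) + ')';
}

console.log(jsonpBody('simpleAJAXLib.handleResponse', { response: 'your_data' }));
```

The response would still be sent with a text/javascript content type, exactly as in the PHP version.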

Posted in Hacking, HTTP, JavaScript | 2 Comments »

Saturday, March 23rd, 2013

If you are building a web-app, which needs to use OAuth for user authentication across Facebook, Google, Twitter and other social media, testing the app locally, on your development machine, can be a real challenge.

On your local machine, the app URL might look like http://localhost/my_app/login.xxx while in the production environment the URL would be http://my_app.com/login.xxx

Now, when you try to test the OAuth integration using Facebook (or any other resource server), it will not work locally. That’s because when you create the Facebook app, you need to give the URL where the code will be located, and this URL is different in the local and production environments.

So how do you resolve this issue?

One way to resolve this issue is to set up a virtual host on your machine, such that your local environment has the same URL as the production code.

To achieve this, follow these 4 simple steps:

1. Map your domain name to your local IP address

Add the following line to /etc/hosts file

127.0.0.1 my_app.com

Now when you request for http://my_app.com in your browser, it will direct the request to your local machine.

2. Activate virtual hosts in apache

Uncomment the following line (remove the #) in /private/etc/apache2/httpd.conf

#Include /private/etc/apache2/extra/httpd-vhosts.conf

3. Add the virtual host in apache

Add the following VHost entry to the /private/etc/apache2/extra/httpd-vhosts.conf file

<VirtualHost *:80>

DocumentRoot "/Users/username/Sites/my_app"

ServerName my_app.com

</VirtualHost>

4. Restart Apache

System preferences > “Sharing” > Uncheck the box “Web Sharing” – apache will stop & then check it again – apache will start.

Now, http://my_app.com/login.xxx will be served locally.

Posted in Deployment, Hosting, HTTP, Tips | No Comments »

Thursday, November 3rd, 2011

Often we need to create short, more expressive URLs. If you are using Nginx as a reverse proxy, one easy way to create short URLs is to define different locations under the respective server directive and then do a permanent rewrite to the actual URL in the Nginx conf file as follows:

http {

....

server {

listen 80;

server_name www.agilefaqs.com agilefaqs.com;

server_name_in_redirect on;

port_in_redirect on;

location ^~ /training {

rewrite ^ http://agilefaqs.com/a/long/url/$uri permanent;

}

location ^~ /coaching {

rewrite ^ http://agilecoach.in$uri permanent;

}

location = /blog {

rewrite ^ http://blogs.agilefaqs.com/show?action=posts permanent;

}

location / {

root /path/to/static/web/pages;

index index.html;

}

location ~* ^.+\.(gif|jpg|jpeg|png|css|js)$ {

add_header Cache-Control public;

expires max;

root /path/to/static/content;

}

}

}

I’ve been using this feature of Nginx for over 2 years, but never fully understood the different prefixes for the location directive.

If you check Nginx’s documentation for the syntax of the location directive, you’ll see:

location [=|~|~*|^~|@] /uri/ { ... }

The URI can be a literal string or a regular expression (regexp).

For regexps, there are two prefixes:

- “~” for case sensitive matching

- “~*” for case insensitive matching

If we have a list of locations using regexps, Nginx checks each location in the order it is defined in the configuration file. The first regexp to match the requested URL stops the search. If no regexp matches, Nginx uses the longest matching literal string.

For example, if we have the following locations:

location ~* /.*php$ {

rewrite ^ http://content.agilefaqs.com$uri permanent;

}

location ~ /.*blogs.* {

rewrite ^ http://blogs.agilefaqs.com$uri permanent;

}

location /blogsin {

rewrite ^ http://agilecoach.in/blog$uri permanent;

}

location /blogsinphp {

root /path/to/static/web/pages;

index index.html;

}

If the requested URL is http://agilefaqs.com/blogs/index.php, Nginx will permanently redirect the request to http://content.agilefaqs.com/blogs/index.php. Even though both regexps (/.*php$ and /.*blogs.*) match the requested URL, the first satisfying regexp (/.*php$) is picked and the search is terminated.

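However, let’s say the requested URL was http://agilefaqs.com/blogsinphp. Nginx will first consider the /blogsin location and then the /blogsinphp location, remembering /blogsinphp as the longest matching literal string. If there were more literal string locations, it would consider them as well. It then checks the regexp locations in order, and because the first regexp (/.*php$) also matches /blogsinphp, that regexp location is used rather than the literal string. The literal string location would be used only if no regexp matched.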

If you want Nginx to pick this location without checking any regexps (which also speeds up the lookup slightly), use the “=” prefix, i.e.

location = /blogsinphp {

root /path/to/static/web/pages;

index index.html;

}

and, for readability, move this location to the top of the other locations. If the requested URL is an exact literal string match for this location, Nginx stops right there without looking at any other location directives.

However note that if http://agilefaqs.com/my/blogsinphp is requested, none of the literal strings will match and hence the first regexp (/.*php$) would be picked up instead of the string literal.

And if http://agilefaqs.com/blogsinphp/my is requested, the exact match (= /blogsinphp) does not apply. The literal string /blogsin does match as a prefix, but regexps take precedence over it, and hence the first matching regexp (/.*blogs.*) is selected.

What if you don’t know the exact string literal, but you want to avoid checking all the regexps?

We can achieve this by using the “^~” prefix as follows:

location = /blogsin {

rewrite ^ http://agilecoach.in/blog$uri permanent;

}

location ^~ /blogsinphp {

root /path/to/static/web/pages;

index index.html;

}

location ~* /.*php$ {

rewrite ^ http://content.agilefaqs.com$uri permanent;

}

location ~ /.*blogs.* {

rewrite ^ http://blogs.agilefaqs.com$uri permanent;

}

Now when we request http://agilefaqs.com/blogsinphp/my, Nginx checks the first location (= /blogsin); /blogsinphp/my is not an exact match. It then looks at (^~ /blogsinphp). It is not an exact match either; however, since we’ve used the ^~ prefix and it is the longest matching literal string, this location is selected and all the regexp locations are skipped.

However if http://agilefaqs.com/blogsin is requested, Nginx will permanently redirect the request to http://agilecoach.in/blog/blogsin even without considering any other locations.

To summarize:

- Search stops if a location with the “=” prefix is an exact literal string match.

- All remaining literal string locations are matched, and the longest match is remembered. If that longest matching location uses the “^~” prefix, it is used and the regexp locations are not searched.

- Regexp locations are matched in the order they are defined in the configuration file. Search stops on the first matching regexp.

- If none of the regexps match, the longest matching literal string location is used.
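The selection order summarized above can be sketched as a small JavaScript function. This is a simplified model for illustration only: selectLocation and the shape of the location objects are made up here, JavaScript regular expressions stand in for the regexp engine Nginx uses, and named (@) locations are ignored.

```javascript
// Each location is { modifier, pattern } where modifier is '=', '^~', '~', '~*' or ''.
function selectLocation(locations, uri) {
  // 1. An exact "=" match wins immediately.
  for (const loc of locations) {
    if (loc.modifier === '=' && loc.pattern === uri) return loc;
  }
  // 2. Remember the longest matching literal string (plain or "^~").
  let best = null;
  for (const loc of locations) {
    const isLiteral = loc.modifier === '' || loc.modifier === '^~';
    if (isLiteral && uri.startsWith(loc.pattern) &&
        (best === null || loc.pattern.length > best.pattern.length)) {
      best = loc;
    }
  }
  // 3. "^~" on the longest literal match skips the regexps.
  if (best !== null && best.modifier === '^~') return best;
  // 4. Otherwise the first matching regexp, in configuration order, wins.
  for (const loc of locations) {
    if (loc.modifier === '~' || loc.modifier === '~*') {
      const re = new RegExp(loc.pattern, loc.modifier === '~*' ? 'i' : '');
      if (re.test(uri)) return loc;
    }
  }
  // 5. No regexp matched: fall back to the remembered literal (or null).
  return best;
}

// The last configuration from this post.
const locations = [
  { modifier: '=', pattern: '/blogsin' },
  { modifier: '^~', pattern: '/blogsinphp' },
  { modifier: '~*', pattern: '/.*php$' },
  { modifier: '~', pattern: '/.*blogs.*' },
];

console.log(selectLocation(locations, '/blogsinphp/my')); // the "^~ /blogsinphp" location
console.log(selectLocation(locations, '/blogsin'));       // the "= /blogsin" location
```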

Even though the order of the literal string locations doesn’t matter, it’s generally a good practice to declare the locations in the following order:

- start with all the “=” prefix,

- followed by “^~” prefix,

- then all the literal string locations

- finally all the regexp locations (since the order matters, place them with the most likely ones first)

BTW, adding a break directive inside any of the location directives has no effect.

Posted in Deployment, Hosting, HTTP, SEO | No Comments »

Thursday, July 21st, 2011

Currently at Industrial Logic we use Nginx as a reverse proxy to our Tomcat web server cluster.

Today, while running a particular report with a large dataset, we started getting timeout errors. When we looked at the Nginx error.log, we found the following error:

[error] 26649#0: *9155803 upstream timed out (110: Connection timed out)

while reading response header from upstream,

client: xxx.xxx.xxx.xxx, server: elearning.industriallogic.com, request:

"GET our_url HTTP/1.1", upstream: "internal_server_url",

host: "elearning.industriallogic.com", referrer: "requested_url"

After digging around for a while, I discovered that our web server was taking more than 60 secs to respond. Nginx has a directive called proxy_read_timeout, which defaults to 60 secs. It determines how long Nginx will wait for the upstream server to send its response (more precisely, the maximum gap between two successive reads from the upstream).

In nginx.conf file, setting proxy_read_timeout to 120 secs solved our problem.

server {

listen 80;

server_name elearning.industriallogic.com;

server_name_in_redirect off;

port_in_redirect off;

location / {

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Real-Host $host;

proxy_read_timeout 120;

...

}

...

}

Posted in Continuous Deployment, Deployment, HTTP | 8 Comments »

Sunday, July 10th, 2011

Recently an important client of Industrial Logic’s eLearning reported that access to our Agile eLearning website was extremely slow (23+ secs per page load.) This came as a shock; we’ve never seen such poor performance from any part of the world. Besides, a 23+ secs page load basically puts our eLearning in the category of “useless junk”.

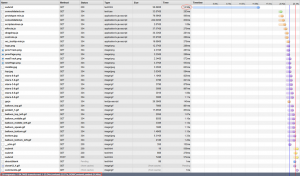

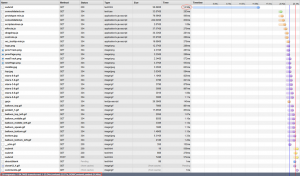

From China (first image), from India (second image)


Notice that from China it’s taking 23.34 secs, while from any other country it takes less than 3 secs to load the page. Clearly the problem occurred when the request originated from China. We suspected network latency issues, so we tried a traceroute.

Sure enough, the traceroute did look suspicious. But we soon realized that, since traceroute and web access (HTTP) use different protocols, they could take completely different routes to reach the destination. (In fact, China has a law by which access to all public websites should go through the Chinese Firewall [The Great Wall]. VPN can only be used for internal server access.)

Ahh..The Great Wall! Could The Great Wall have something to do with this issue?

To nail the issue, we used a VPN from China to test our site. Great, with the VPN, we were getting 3 secs page load.

After cursing The Great Wall; just as we were exploring options for hosting our server inside The Great Wall, we noticed something strange. Certain pages were loading faster than others consistently. On further investigation, we realized that all pages served from our Windows servers were slower by at least 14 secs compared to pages served by our Linux servers.

Hmmm…somehow the content served by our Windows Server is triggering a check inside the Great Wall.

What keywords could the Great Wall be checking for?

Well, we don’t have any option other than brute forcing the keywords.

Wait a sec…we serve our content via HTTPS. Could the Great Wall be looking for keywords inside an HTTPS stream? Hope not!

Maybe it has to do with some difference in the headers, since most firewalls look at header info to make decisions.

But after thinking a little more, it occurred to me that there cannot be any header difference (except one parameter in the URL and maybe something in the cookie). That’s because we use Nginx as our reverse proxy. Whether the actual content is served from Windows or Linux servers should be transparent to clients.

Just to be sure that something was not slipping by, we decided to do a small experiment. Have the exact same content served by both Windows and Linux box and see if it made any difference. Interestingly the exact same content served from Windows server is still slow by at least 14 secs.

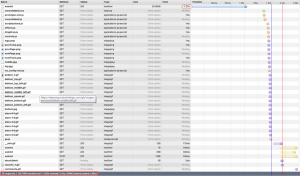

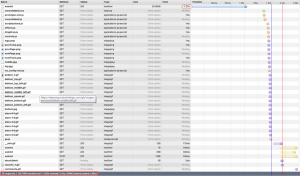

Let’s look at the server response from the browser again:

Notice the 15 secs for the initial response to the submit request. This happens only when the request is served by the Windows Server.

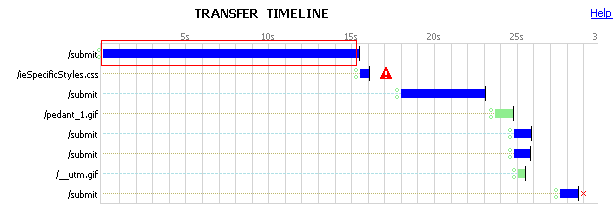

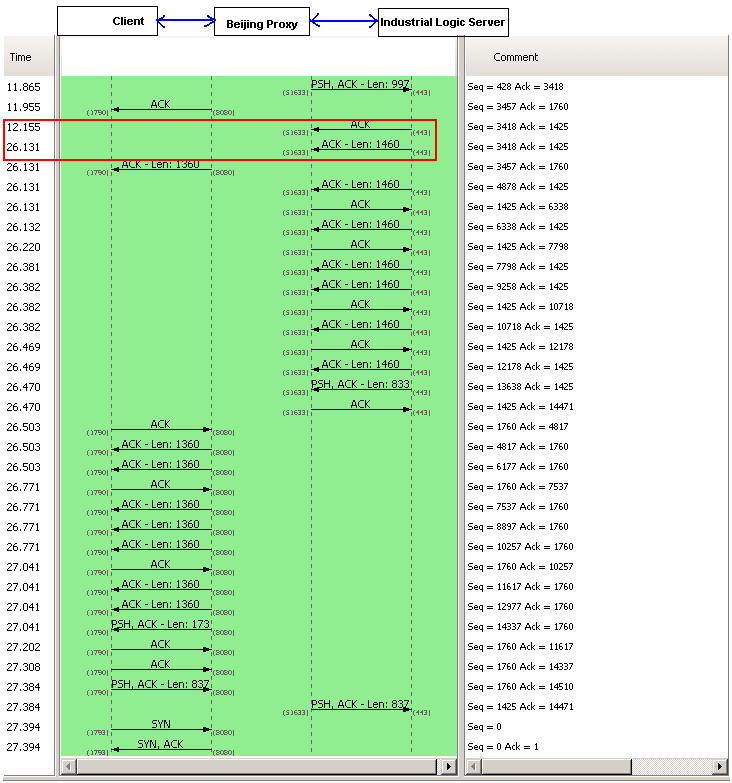

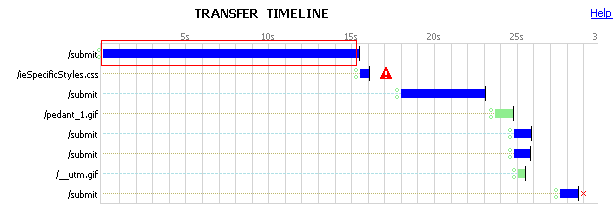

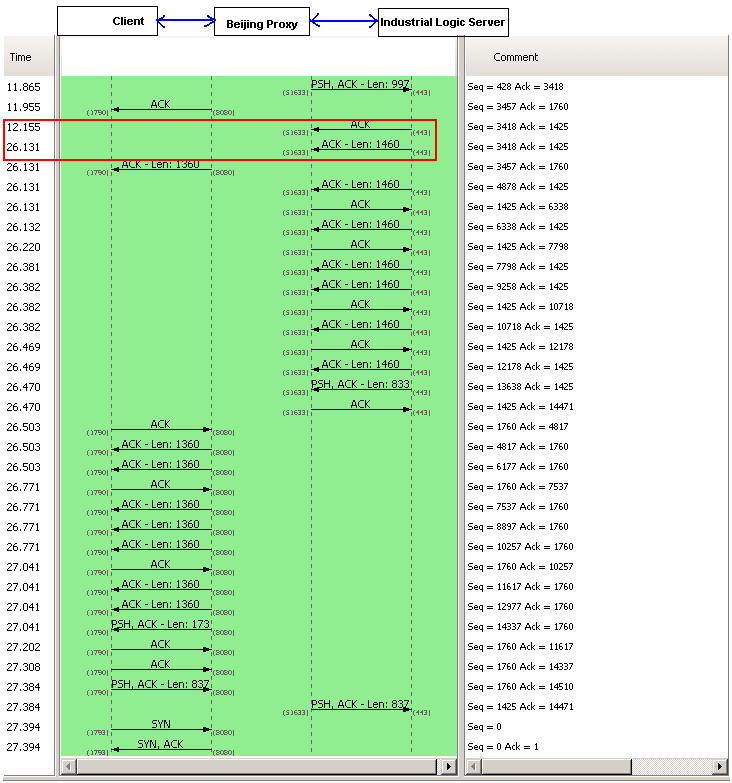

We had to find out where those 15 secs were coming from, so we decided to take a deeper look using a network analysis tool. And look what we found:

A 14+ sec response from our server side. However this happens only when the request is coming from China. Since our application does not have any country specific code, who else could be interfering with this? There are 3 possibilities:

- Firewall settings on the Windows Server: It was easy to rule this out, since we had disabled the firewall for all requests coming from our Reverse Proxy Server.

- Our Datacenter Network Settings: Possibly something put in place to protect against DDoS attacks from Chinese hackers. A possibility.

- Low level Windows Network Stack: God knows what…

We opened a ticket with our Datacenter. They responded back with their standard response (from a template) saying: “Please check with your client’s ISP.”

Just as I was losing hope, I explained this problem to Devdas. When he heard about the 14 secs delay, he immediately told me that it sounded like a standard reverse DNS lookup timeout.

I was pretty sure we did not do any reverse DNS lookup. Besides, if we did it in our code, both Windows and Linux servers should have the same delay.

To verify this, we installed Wireshark on our Windows servers to monitor reverse DNS lookups. Sure enough, nothing showed up.

I was losing hope by the minute. Just out of curiosity, one night, I searched our whole code base for any reverse DNS lookup code. Surprise! Surprise!

I found a piece of logging code, which was taking the User IP and trying to find its host name. That has to be the culprit. But then why don’t we see the same delay on Linux server?

On further investigation, I figured out that our Windows Server did not have any DNS servers configured for the private Ethernet interface we were using, while Linux had them.

We eliminated the useless logging code and configured the right DNS servers on our Windows Servers. And guess what, all requests from Windows and Linux are now served in less than 2 secs. (Better than before, because we eliminated a useless reverse DNS lookup, which was timing out for China.)
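We simply removed the lookup, but if the host name were still wanted in the logs, one option would be to cap how long the lookup may take. Here is a minimal sketch of that idea in JavaScript on Node.js; withTimeout and hostNameForLog are hypothetical helpers written for this illustration, not the code we had in our application.

```javascript
const dns = require('dns');

// Resolve with `fallback` if `promise` has not settled within `ms` milliseconds.
function withTimeout(promise, ms, fallback) {
  let timer;
  const timeout = new Promise((resolve) => {
    timer = setTimeout(() => resolve(fallback), ms);
  });
  // Clear the timer either way so it does not keep the process alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Look up the host name for a client IP for logging purposes,
// falling back to the raw IP when the lookup fails or is too slow.
function hostNameForLog(ip, ms) {
  const lookup = dns.promises.reverse(ip).then(
    (names) => names[0] || ip,
    () => ip // lookup failed: log the raw IP instead
  );
  return withTimeout(lookup, ms, ip);
}
```

With something like hostNameForLog(clientIp, 200), a misconfigured DNS server would cost at most 200 ms per request instead of 14 secs.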

This was fun! Great learning experience.

Posted in Deployment, Hosting, HTTP, Linux, Networking, Windows | 1 Comment »

Thursday, June 2nd, 2011

We don’t have something like LiveHTTPHeaders in Chrome. How do we then view the raw HTTP request and response headers in Chrome?

- Open a new tab and enter about:net-internals as the URL

- Go to Events tab

- Enter URL_REQUEST in the Filter box to filter down to only URL Request Events

- Pick the event for your URL

- In the right-pane, look at the Log tab

- The HTTP Request header is listed under HTTP_TRANSACTION_SEND_REQUEST_HEADERS

- The HTTP Response header is listed under HTTP_TRANSACTION_READ_RESPONSE_HEADERS

Posted in HTTP | 4 Comments »
