Sunday, July 8th, 2012

| Letter | Terms |
|---|---|
| A | Acceptance Criteria/Test, Automation, A/B Testing, Adaptive Planning, Appreciative inquiry |
| B | Backlog, Business Value, Burndown, Big Visible Charts, Behavior Driven Development, Bugs, Build Monkey, Big Design Up Front (BDUF) |
| C | Continuous Integration, Continuous Deployment, Continuous Improvement, Celebration, Capacity Planning, Code Smells, Customer Development, Customer Collaboration, Code Coverage, Cyclomatic Complexity, Cycle Time, Collective Ownership, Cross functional Team, C3 (Complexity, Coverage and Churn), Critical Chain |
| D | Definition of Done (DoD)/Doneness Criteria, Done Done, Daily Scrum, Deliverables, Dojos, Drum Buffer Rope |
| E | Epic, Evolutionary Design, Energized Work, Exploratory Testing |
| F | Flow, Fail-Fast, Feature Teams, Five Whys |
| G | Grooming (Backlog) Meeting, Gemba |
| H | Hungover Story |
| I | Impediment, Iteration, Inspect and Adapt, Informative Workspace, Information radiator, Immunization test, IKIWISI (I’ll Know It When I See It) |
| J | Just-in-time |
| K | Kanban, Kaizen, Knowledge Workers |
| L | Last responsible moment, Lead time, Lean Thinking |
| M | Minimum Viable Product (MVP), Minimum Marketable Features, Mock Objects, Mistake Proofing, MOSCOW Priority, Mindfulness, Muda |
| N | Non-functional Requirements, Non-value add |
| O | Onsite customer, Opportunity Backlog, Organizational Transformation, Osmotic Communication |
| P | Pivot, Product Discovery, Product Owner, Pair Programming, Planning Game, Potentially shippable product, Pull-based-planning, Predictability Paradox |
| Q | Quality First, Queuing theory |
| R | Refactoring, Retrospective, Reviews, Release Roadmap, Risk log, Root cause analysis |
| S | Simplicity, Sprint, Story Points, Standup Meeting, Scrum Master, Sprint Backlog, Self-Organized Teams, Story Map, Sashimi, Sustainable pace, Set-based development, Service time, Spike, Stakeholder, Stop-the-line, Sprint Termination, Single Click Deploy, Systems Thinking, Single Minute Setup, Safe Fail Experimentation |
| T | Technical Debt, Test Driven Development, Ten minute build, Theme, Tracer bullet, Task Board, Theory of Constraints, Throughput, Timeboxing, Testing Pyramid, Three-Sixty Review |
| U | User Story, Unit Tests, Ubiquitous Language, User Centered Design |
| V | Velocity, Value Stream Mapping, Vision Statement, Vanity metrics, Voice of the Customer, Visual controls |
| W | Work in Progress (WIP), Whole Team, Working Software, War Room, Waste Elimination |
| X | xUnit |
| Y | YAGNI (You Aren’t Gonna Need It) |
| Z | Zero Downtime Deployment, Zen Mind |

Posted in Agile | No Comments »

Tuesday, June 21st, 2011

Time and again, I find developers (including myself) over-relying on automated tests, especially unit tests, which run fast and reliably.

In the urge to save time today, we want to automate everything (which is good), but then we become blinded by this desire, only to realize later that we’ve spent a lot more time debugging issues that our automated tests did not catch (leaving aside the embarrassment this causes).

I’ve come to believe:

A little bit of manual sanity testing by the developer before checking in code can save hours of debugging and embarrassment.

Again, this is contextual and needs personal judgement based on the nature of the change one makes to the code.

In addition to quickly running a manual sanity test on your machine before checking in, it can be extremely important to do some exploratory testing as well. However, we cannot always test things locally. In those cases, we can test them on a staging environment or on a live server. Either way, it’s important that you discover the issue well before your users encounter it.

P.S.: Recently we ran into Error CS0234: The type or namespace name ‘Specialized’ does not exist in the namespace ‘System.Collections’, which prompted me to write this blog post.

Posted in Agile, Continuous Deployment, Deployment, Programming, Testing | 2 Comments »

Monday, February 9th, 2009

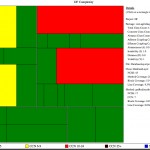

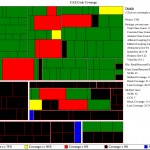

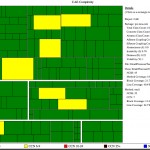

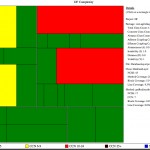

Some time back, I spent one week helping a project (a server written in Java) clear its technical debt. The code base is tiny because it leverages a lot of an existing server framework to do its job. This server handles extremely high volumes of data and requests and is a very important part of our server infrastructure. Here are some results:

| Topic | Metric | Before | After |
|---|---|---|---|
| Project Size: Production Code | Packages | 1 | 4 |
| | Classes | 4 | 13 |
| | Methods | 15 (average 3.75/class) | 68 (average 5.23/class) |
| | LOC | 172 (average 11.47/method and 43/class) | 394 (average 5.79/method and 30.31/class) |
| | Average Cyclomatic Complexity/Method | 3.27 | 1.58 |
| Project Size: Test Code | Packages | 0 | 6 |
| | Classes | 0 | 11 |
| | Methods | 0 | 90 |
| | LOC | 0 | 458 |
| Code Coverage | Line Coverage | 0% | 96% |
| | Block Coverage | 0% | 97% |
| Cyclomatic Complexity | | | |
| Obvious Dead Code | Public methods | class DatabaseLayer: releasePool(). Total: 1 method in 1 class | class DFService: overloaded constructor. Total: 1 method in 1 class. Note: This method is required by the tests. |
| Automation | | | |
| Version Control Usage | Average Commits Per Day | 0 | 7 |
| | Average # of Files Changed Per Commit | 12 | 4 |
| Coding Convention Violation | Count | 96 | 0 |
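The per-class and per-method averages quoted in the Project Size row follow directly from the raw counts. As a minimal sketch (the `CodeSize` class and its method names are hypothetical, introduced only for this illustration and not part of any tool used on the project), they can be recomputed like this:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeSize:
    """Raw size counts for one snapshot of the production code (hypothetical helper)."""

    classes: int
    methods: int
    loc: int

    def methods_per_class(self) -> float:
        return round(self.methods / self.classes, 2)

    def loc_per_method(self) -> float:
        return round(self.loc / self.methods, 2)

    def loc_per_class(self) -> float:
        return round(self.loc / self.classes, 2)


# Counts copied from the Project Size row of the report above.
before = CodeSize(classes=4, methods=15, loc=172)
after = CodeSize(classes=13, methods=68, loc=394)

for label, size in (("before", before), ("after", after)):
    print(label, size.methods_per_class(), size.loc_per_method(), size.loc_per_class())
```

Under these assumptions the recomputed values match the averages in the report: methods got roughly half as long even though the total LOC more than doubled.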

Another similar report.

Posted in Agile, Coaching, Design, Metrics, Planning, Programming, Testing, Tools | No Comments »

Monday, February 2nd, 2009

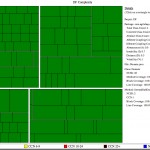

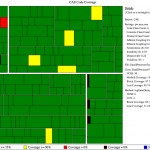

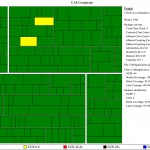

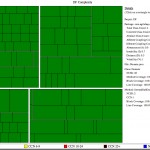

Recently I spent two weeks helping a project clear its technical debt. Here are some results:

| Topic | Metric | Before | After |
|---|---|---|---|
| Project Size: Production Code | Packages | 7 | 4 |
| | Classes | 23 | 20 |
| | Methods | 104 (average 4.52/class) | 89 (average 4.45/class) |
| | LOC | 912 (average 8.77/method and 39.65/class) | 627 (average 7.04/method and 31.35/class) |
| | Average Cyclomatic Complexity/Method | 2.04 | 1.79 |
| Project Size: Test Code | Packages | 1 | 4 |
| | Classes | 10 | 18 |
| | Methods | 92 | 120 |
| | LOC | 410 | 771 |
| Code Coverage | Line Coverage | 46% | 94% |
| | Block Coverage | 43% | 96% |
| Cyclomatic Complexity | | | |
| Obvious Dead Code | Public methods | class CryptoUtils: String getSHA1HashOfString(String), String encryptString(String), String decryptString(String); class DbLogger: writeToTable(String, String); class DebugUtils: String convertListToString(java.util.List), String convertStrArrayToString(String); class FileSystem: int getNumLinesInFile(String). Total: 7 methods in 4 classes | class BackgroundDBWriter: stop(). Total: 1 method in 1 class. Note: This method is required by the tests. |
| Automation | | | |
| Version Control Usage | Average Commits Per Day | 1 | 4 |
| | Average # of Files Changed Per Commit | 2 | 9. Note: Since we are heavily refactoring, lots of files are touched for each commit. But the frequency of commits is fairly high to ensure we are not taking big leaps. |
| Coding Convention Violation | Count | 976 | 0 |

Something interesting to watch for is how the production code becomes crisper (fewer packages, classes and LOC) and how the amount of test code grows larger than the production code.
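To put numbers on that observation, here is a small sketch that derives the test-to-production LOC ratio and the percentage change from the LOC figures reported in this post. The function names are hypothetical and exist only for this illustration.

```python
def ratio_of_test_to_production(test_loc: int, production_loc: int) -> float:
    """Lines of test code per line of production code, rounded to 2 places."""
    return round(test_loc / production_loc, 2)


def percent_change(before: int, after: int) -> float:
    """Relative change from before to after, in percent, rounded to 2 places."""
    return round(100 * (after - before) / before, 2)


# LOC figures copied from the Project Size row of this report.
production_before, production_after = 912, 627
test_before, test_after = 410, 771

print("test/production before:", ratio_of_test_to_production(test_before, production_before))
print("test/production after: ", ratio_of_test_to_production(test_after, production_after))
print("production LOC change %:", percent_change(production_before, production_after))
print("test LOC change %:      ", percent_change(test_before, test_after))
```

With these inputs the ratio moves from about 0.45 to about 1.23 lines of test code per line of production code, while production LOC shrinks by roughly 31%.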

Another similar report.

Posted in Agile, Coaching, Design, Metrics, Programming, Testing, Tools | 4 Comments »
