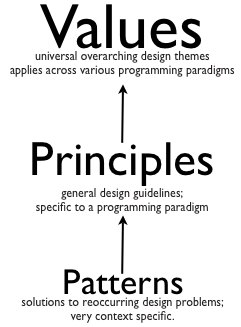

Stay away from Design Patterns; master Value, Principles and Basics Skills first

Thursday, June 7th, 2012Unfortunately many developers think they already know enough values and principles, so its not worth spending anytime learning or practicing them. Instead they want to learn the holy grail of software design – “the design patterns.”

While the developers might think, design patterns is the ultimate nirvana of designing, I would say, they really need to learn how to think in their paradigm plus internalize the values and principles first. I would suggest developers should focus on the basics for at least 6 months before going near patterns. Else they would gain a very superficial knowledge about the patterns, and won’t be able to appreciate its true value or applicability.

Any time I get a request for teaching OO design patterns, I insist that we start the workshop with a recap of the values and principles. Time and again, I find developers in the class agreeing that they are having a hard time thinking in Objects. Most developers are still very much programming in the procedural way. Trying to retrofit objects, but not really thinking in-terms of objects and responsibilities.

Recently a student in my class shared that:

I thought we were doing Object Oriented Programming, but clearly its a paradigm shift that we still need to make.

On the first day, I start the class with simple labs, in the first 4-5 labs, you can see the developers struggle to come up with a simple object-oriented solution for basic design problems. They end up with a very procedural solution:

- main class with a bunch of static methods or

- data holder classes with public accessors and other or/er classes pulling that data to do some logic.

- very heavy use of if-else or switch

Its sad that teams don’t understand nor give importance to the following important OO concepts:

- Data and Logic together – Encapsulation (Everyone knows the definition of encapsulation, but how to put it in proper use, is always a big question mark.) Many developers write public Getters/Setters or Accessors by default on their classes. And are shocked to realize that it breaks encapsulation.

- Inheritance is the last option: It is quite common to see solutions where slight variation in data is modeled as a hierarchy of classes. The child classes have no behavior in them and often leads to a class explosion.

- Composition over Inheritance – In various labs, developers go down the route of using Inheritance to reuse behavior instead of thinking of a composition based design. Sadly, inheritance based solutions have various flaws that the team can’t realize, until highlighted. Coming up with a good inheritance based design, when the parent is mutable, it extremely tricky.

- Focus on smart data-structure: The developers have a tough time coming up with smart data-structure and putting logic around it. Via various labs, I try to demonstrate how designing smart data-structures can make their code extremely simple and minimalistic.

I’ve often noticed that, when you give developers a problem, they start off by drawing a flow chart, data-flow diagram or some other diagram which naturally lends them into a procedural design.

Thinking in terms of Objects requires thinking of objects and responsibilities, not so much in terms of flow. Its extremely important to understand the problem, distill it down to the crux by eliminating noise and then building a solution in an incremental fashion. Many developers have a misconception that a good designs has to be fully flushed out before you start. I’ve usually found that a good design emerges in an incremental fashion.

Even though many developers know the definition of high-cohesion, low-coupling and conceptual integrity, when asked to give a software or non-software example, they have a hard time. It goes to show that they don’t really understand the concept in action.

Developers might have read the bookish definition of the various Design Principles. But when asked to find out what design principles were violated in a sample design, they are not able to articulate. Also often you find a lot of misconception about the various principles. For example, Single Responsibility, few developers say that each method should do only one thing and a class should only have one responsibility. What does that actually mean? It turns out that SRP has to do more with temporal symmetry and change. Grouping behavior together from a change point of view.

Even though most developers raise their hands when asked if they know code smells, they have a tough time identifying them or avoiding them in their design. Developers need a lot of hands-on practice to recognize and avoid various code smells. Once you learn to recognize code smells, the next step is to learn how to effectively refactor away from them.

Often I find developers have the most expensive and jazzy tools & IDEs, but when you watch them code, they use their IDEs just as a text-editor. No automated refactoring. Most developers type “Public class xxx” instead of writing the caller code first and then using the IDE to generate the required skeleton code for them. Use of keyboard shortcuts is as rare as seeing solar eclipse. Pretty much most developers practice what I call mouse driven programming. In my experience, better use of IDE and Refactoring tools can give developers at least 5x productivity boost.

I hardly remember meeting a developer who said they don’t know how to unit test. Yet time and again, most developers in my class struggle to write good unit tests. Due to lack of familiarity or lack of practice or stupid misconceptions, most developers skip writing any automated unit tests. Instead they use Sysout/Console.out or other debugging mechanism to validate their logic. Getting better at their unit testing skills and then gradually TDD can really give them a productivity boost and improve their code quality.

I would be extremely happy if every development shop, invested 1 hour each week to organize a refactoring fest, design fest or a coding dojo for their developers to practice and hone their design skills. One can attend as many trainings as they want, but unless they deliberately practice and apply these techniques on job, it will not help.