
| |

|

Sunday, July 8th, 2012

| A |

Acceptance Criteria/Test, Automation, A/B Testing, Adaptive Planning, Appreciative inquiry |

| B |

Backlog, Business Value, Burndown, Big Visible Charts, Behavior Driven Development, Bugs, Build Monkey, Big Design Up Front (BDUF) |

| C |

Continuous Integration, Continuous Deployment, Continuous Improvement, Celebration, Capacity Planning, Code Smells, Customer Development, Customer Collaboration, Code Coverage, Cyclomatic Complexity, Cycle Time, Collective Ownership, Cross functional Team, C3 (Complexity, Coverage and Churn), Critical Chain |

| D |

Definition of Done (DoD)/Doneness Criteria, Done Done, Daily Scrum, Deliverables, Dojos, Drum Buffer Rope |

| E |

Epic, Evolutionary Design, Energized Work, Exploratory Testing |

| F |

Flow, Fail-Fast, Feature Teams, Five Whys |

| G |

Grooming (Backlog) Meeting, Gemba |

| H |

Hungover Story |

| I |

Impediment, Iteration, Inspect and Adapt, Informative Workspace, Information radiator, Immunization test, IKIWISI (I’ll Know It When I See It) |

| J |

Just-in-time |

| K |

Kanban, Kaizen, Knowledge Workers |

| L |

Last responsible moment, Lead time, Lean Thinking |

| M |

Minimum Viable Product (MVP), Minimum Marketable Features, Mock Objects, Mistake Proofing, MoSCoW Priority, Mindfulness, Muda |

| N |

Non-functional Requirements, Non-value add |

| O |

Onsite customer, Opportunity Backlog, Organizational Transformation, Osmotic Communication |

| P |

Pivot, Product Discovery, Product Owner, Pair Programming, Planning Game, Potentially shippable product, Pull-based-planning, Predictability Paradox |

| Q |

Quality First, Queuing theory |

| R |

Refactoring, Retrospective, Reviews, Release Roadmap, Risk log, Root cause analysis |

| S |

Simplicity, Sprint, Story Points, Standup Meeting, Scrum Master, Sprint Backlog, Self-Organized Teams, Story Map, Sashimi, Sustainable pace, Set-based development, Service time, Spike, Stakeholder, Stop-the-line, Sprint Termination, Single Click Deploy, Systems Thinking, Single Minute Setup, Safe Fail Experimentation |

| T |

Technical Debt, Test Driven Development, Ten minute build, Theme, Tracer bullet, Task Board, Theory of Constraints, Throughput, Timeboxing, Testing Pyramid, Three-Sixty Review |

| U |

User Story, Unit Tests, Ubiquitous Language, User Centered Design |

| V |

Velocity, Value Stream Mapping, Vision Statement, Vanity metrics, Voice of the Customer, Visual controls |

| W |

Work in Progress (WIP), Whole Team, Working Software, War Room, Waste Elimination |

| X |

xUnit |

| Y |

YAGNI (You Aren’t Gonna Need It) |

| Z |

Zero Downtime Deployment, Zen Mind |

Posted in Agile | No Comments »

Saturday, December 4th, 2010

Every day I hear horror stories of how developers are harassed by managers and customers for not having predictable/stable velocity. Developers are penalized when their estimates don’t match their actuals.

If I understand correctly, the reason we moved to story points was to avoid this public humiliation of developers by their managers and customers.

It’s probably helped some teams, but the vast majority of teams today are no better off than before, except that now they have one extra level of indirection because of story points and then velocity.

We can certainly blame the developers and managers for not understanding story points in the first place. But will that really solve the problem teams are faced with today?

Please consider reading my blog on Story Points are Relative Complexity Estimation techniques. It will help you understand what story points are.

Assuming you know what story point estimates are, let’s consider that we have some user stories with different story points, which give us a relative complexity estimate for each story.

Then we pick up the most important stories (with different relative complexities) and try to do those stories in our next iteration/sprint.

Let’s say we end up finishing 6 user stories at the end of this iteration/sprint. We add up all the story points for each user story which was completed and we say that’s our velocity.

Next iteration/sprint, we say we can pick up roughly the same total number of story points, based on our velocity. And we plan our iterations/sprints this way. We find that velocity oscillates from one iteration/sprint to the next, which in theory should normalize over a period of time.

But do you see a fundamental error in this approach?

First we said a 2-point story is not 2 times bigger than a 1-point story. Let’s say a 1-point story takes 6 hrs to implement, while a 2-point story takes 9 hrs. That is why we assigned seemingly random numbers (the Fibonacci series) to story points in the first place. But then we go and add them all up.

If you still don’t get it, let me explain with an example.

In the nth iteration/sprint, we implemented 6 stories:

- Two 1-point stories

- Two 3-point stories

- One 5-point story

- One 8-point story

So our total velocity is ( 2*1 + 2*3 + 5 + 8 ) = 21 points. In 2 weeks we got 21 points done, hence our velocity is 21.
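The arithmetic above, as a minimal Python sketch. The `completed` list and the `velocity` function name are mine, used purely for illustration:

```python
# Story points of the six stories finished in the nth iteration/sprint
# (values taken from the example above).
completed = [1, 1, 3, 3, 5, 8]


def velocity(story_points):
    """Velocity as it is commonly computed: the plain sum of completed story points."""
    return sum(story_points)


print(velocity(completed))  # 21
```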

Next iteration/sprint, we’ll take:

- Twenty-one 1-point stories

Take a wild guess what would happen?
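To put rough numbers on that guess, here is a small Python sketch. Only the 6 hours for a 1-point story comes from the earlier example. The hours for 3-, 5- and 8-point stories are values I made up to keep the "bigger, but not proportionally bigger" shape, so treat the result as an illustration, not data.

```python
# Hypothetical hours per story size. Only the 1-point figure (6 hrs) comes from
# the text; the 3-, 5- and 8-point figures are invented for illustration.
ASSUMED_HOURS = {1: 6, 3: 12, 5: 16, 8: 22}


def total_points(stories):
    """Sum of story points, i.e. what we would call velocity."""
    return sum(stories)


def total_hours(stories, hours_by_points=ASSUMED_HOURS):
    """Sum of the assumed hours for each story."""
    return sum(hours_by_points[points] for points in stories)


nth_sprint = [1, 1, 3, 3, 5, 8]   # the six stories from the example
next_sprint = [1] * 21            # twenty-one 1-point stories

for name, stories in [("nth", nth_sprint), ("next", next_sprint)]:
    print(name, total_points(stories), "points,", total_hours(stories), "hours")
```

Under these assumed numbers both sprints add up to 21 points, yet the second one needs 126 hours against 74 for the first.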

Yeah I know, hence we don’t take just one iteration/sprint’s velocity, we take an average across many iterations/sprints.

But it’s a real big stretch to take something which was inherently not meant to be mathematical or statistical in nature and calculate velocity based on it.

If velocity anyway averages out over a period of time, then why not just count the number of stories and use them as your velocity instead of doing story-points?

Over a period of time, stories will roughly be broken down into similar-sized stories, and even if they aren’t, they will average out.

Isn’t that much simpler (with about the same amount of error) than doing all the story point business?
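A sketch of what that looks like in Python. The iteration history below is invented for illustration. The point is only that both numbers come out of the same averaging, and the story count needs no estimation session to produce.

```python
from statistics import mean

# Hypothetical history: story points of the stories completed in each iteration.
history = [
    [1, 1, 3, 3, 5, 8],
    [2, 3, 3, 5, 5],
    [1, 2, 2, 3, 5, 8],
    [3, 3, 5, 8],
]


def average_point_velocity(iterations):
    """Average of the summed story points per iteration."""
    return mean(sum(stories) for stories in iterations)


def average_story_count(iterations):
    """Average number of stories completed per iteration; no points needed."""
    return mean(len(stories) for stories in iterations)


print(average_point_velocity(history))  # 19.75
print(average_story_count(history))     # 5.25
```

Either number can be used to plan the next iteration/sprint: "about 20 points" or "about 5 stories".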

I used this approach for a few years and certainly benefited from it. No doubt it’s better than upfront effort estimation. But is this the best we can do?

I know many teams who don’t do effort estimation or relative complexity estimation and have moved to a flow model instead of trying to fit things into a box.

Consider reading my blog on Estimations Considered Harmful.

Posted in Agile, Analysis, Planning | 9 Comments »

Saturday, December 4th, 2010

If we have 2 user stories (A and B), I can say A is smaller than B; hence, A gets fewer story points than B.

But what does “smaller” mean?

- Less Complex to Understand

- Smaller set of acceptance criteria

- Have prior experience doing something similar to A compared to B

- Have a rough (better/clearer) idea of what needs to be done to implement A compared to B

- A is less volatile and vague compared to B

- and so on…

So, A gets fewer story points than B. But clearly we don’t know how long it’s going to take to implement A or B.

Hence we don’t know how much more effort and time will be required to implement B compared to A. All we know at this point is, A is smaller than B.

It is important to understand that story points are a relative complexity estimation technique, NOT an effort estimation technique (how many people? how long will it take?).

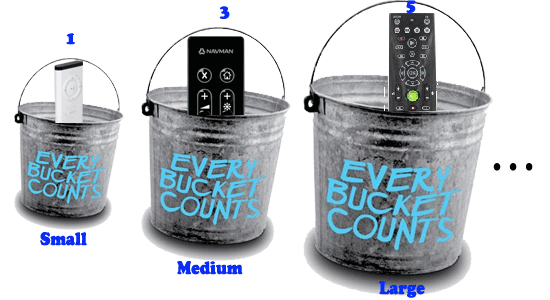

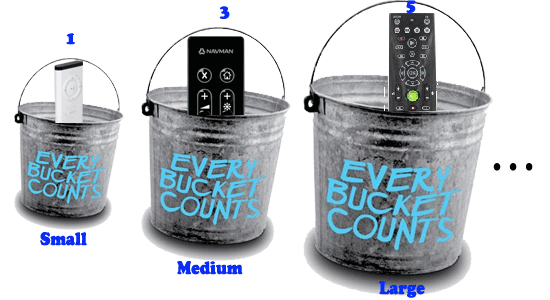

Now if we had 5 stories (A, B, C, D and E) and applied the same thinking, we could come up with different buckets to put these stories in.

Small, Medium, Large, XL, XXL and so on….

And then we can say all stories in the small bucket are 1 story point, all stories in the medium bucket are 3 story points, the next bucket is 5 story points, then 8 story points and so on…

Why do we give these seemingly random numbers instead of sequential numbers (1, 2, 3, 4, …)?

Because with sequential numbers, people could get confused and think that a medium story with 2 points is 2 times bigger than a small story with 1 point.

We cannot apply mathematics or statistics to story points.
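One way to picture this is to treat sizes as ordered labels that can be compared but not added. A minimal Python sketch follows. The `Size` enum and the story names are my own illustration, not something any tool prescribes.

```python
from enum import Enum


class Size(Enum):
    """Ordered buckets: the values only encode order, not magnitude."""
    SMALL = 1
    MEDIUM = 2
    LARGE = 3
    XL = 4
    XXL = 5

    def __lt__(self, other):
        # Allow "is smaller than" comparisons between sizes, and nothing else.
        if isinstance(other, Size):
            return self.value < other.value
        return NotImplemented


stories = {"A": Size.SMALL, "B": Size.MEDIUM, "C": Size.XL}

print(stories["A"] < stories["B"])          # True: A is smaller than B
print(sorted(stories, key=stories.get))     # ['A', 'B', 'C']

try:
    stories["A"] + stories["B"]             # adding sizes is not defined
except TypeError:
    print("sizes can be ranked, not added")
```

Ranking works and addition fails, which is the behaviour the buckets are supposed to have.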

Posted in Agile, Analysis | 2 Comments »

Thursday, May 21st, 2009

Let me ask you, what do you mean by productivity?

Number of Lines of Code (LoC) committed per unit time….ahhh….I know measuring LoC is a professional malpractice.

Maybe number of features committed per unit time….ahhh….ok…it does not end when I commit the code, it also has to be approved by the QA. So let’s say number of features approved by the QA per unit time.

Well, not all my features are the same size, and they don’t all add the same value. So measuring the number of features does not really give a true picture of productivity. Also, just because the QA approved the feature, it does not mean anything. There could be bugs or change requests coming from production.

How about using function points or story points or something like that?

Really? You are not kidding right?

Let me ask you something, what is the goal of software development?

Building software is not an end in itself. It’s a means to:

- Enabling your users to be more productive. Making their life easier.

- Helping them to solve real business problems, faster and better

- Providing them a competitive edge

- Providing new services that could not be possible before

- Enabling users to share and find information more rapidly, conveniently

- Automating and simplifying their existing (business) process/workflow

- Innovating and creating kewl stuff to play with

- And of course making money in the process

- And so on…

Keeping this in mind, it’s lame to measure or think about productivity as the amount of software created. I’ve never met a user who wants more software. What users want is to solve their problems, or to be enabled, with the least amount of software (no software is even better).

To be able to really help your users, you need to build the least amount of software that will not only solve the problem but will also delight them. Get them addicted to your software. IMHO, that’s the real goal of software development. One needs to use this goal to measure productivity (or anything useful for that matter).

So

- Checking-in code

- Having the QA approve it

- Having it deployed in production

None of these is worth measuring. For a minute, get out of your tunnel-visioned coding world and think about the bigger context in which you operate. Life is much bigger. Local optimization does not really help.

I recommend you read my post about: Why should I bother to learn and practice TDD?

Posted in Agile, Design, Programming, Testing | 14 Comments »

|